ISSUE

An estimated 2.5 million child sex offenders are online at any given moment.

At the same time, our children’s screen time is increasing. They are constantly exposed to this danger.

Of an average daily screen time of 6 to 9 hours, children and adolescents spend more than 3 hours gaming, video chatting and using social media.

A growing danger lurks in the shadow of digital progress: one in five children between the ages of eight and eighteen has been attacked at least once through digital channels.

Conversations with children on social gaming platforms can escalate into high-risk grooming situations within 19 seconds.

Grooming involves building a relationship with children to prepare them for abuse by manipulating trust and using fear and shame to maintain silence.

Groomer: Hey, you seem cool. What games do you like?

Child: Hi! I love Minecraft and Fortnite.

Groomer: Nice! I play those too. We should game together sometime. Want some tips?

Child: Sure, that would be fun!

Groomer: Great! You can always talk to me about anything, okay? 😊

Child: Thanks

Children’s constant access to digital devices has led to an increase in sexting and in the non-consensual sharing of intimate images.

Cool, got any pics of you?

Maybe, why?

Just curious. Show me one, I’ll send you one too.

Okay, here. 🙂

You look awesome! How about one without your shirt?

I don’t know…

It’ll be our secret. Here is one of me

34% of surveyed girls and 23% of boys have been confronted online with intimate or suggestive questions that they did not want to answer.

Children and young people are often persuaded to send nude selfies. Such images are Child Sexual Abuse Material (CSAM). CSAM includes any nude or sexual image or video of someone under the age of 18 (or someone who is depicted as being under the age of 18).

do you like it? send me one too

not sure

please, it’ll be our secret

okey, here’s one. but please keep it to yourself

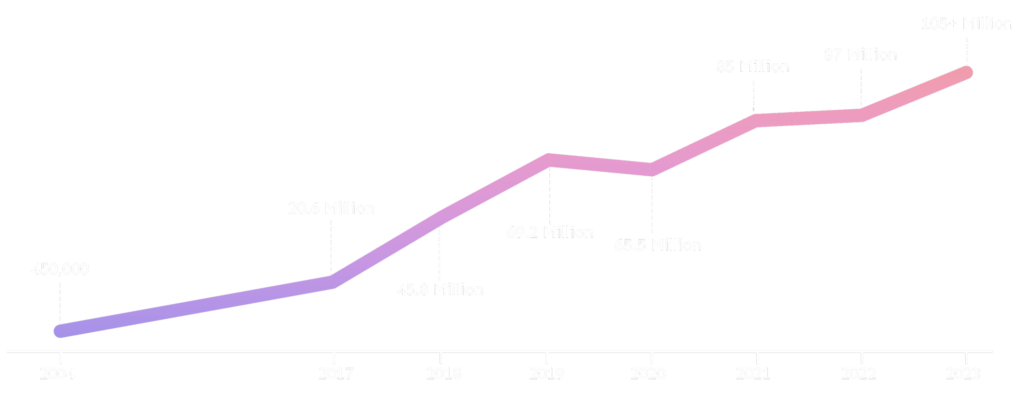

450,000 files of suspected CSAM reported.

In 2023, there were over 105 million files of suspected CSAM reported.

(Source: Thorn Foundation, 2024)

CSAM = Child Sexual Abuse Material

But trust is often abused.

Sextortion involves using sexual images to coerce victims, often for financial gain. 84% of victims did not seek help because they were too embarrassed or ashamed. Unfortunately, sextortion greatly increases the risk of suicide.

Transfer 500 francs to me now or I’ll upload the picture online and show it to all your friends.

whaat? but i don’t have that much money

fast i need it now. send me the credit card details

wait, wait I have to get the wallet…

On average, 1 to 2 children in every school class are affected.

Because the internet never forgets, victims are repeatedly confronted with these images and videos, and so relive the trauma again and again.

We want to put an end to this terrible situation.

We are developing software to combat these attacks. It can be installed on any digital device (computers, smartphones and tablets) and protects the child from harmful images, videos, texts and unwanted access.

This AI-based application works much like an ad blocker on the device. It actively blocks attacks delivered through text, audio, video and image content.