Step 5:

Parents decide what happens to suspicious data: they can delete it or report the case. A reported case is sent to the appropriate authority for further investigation.

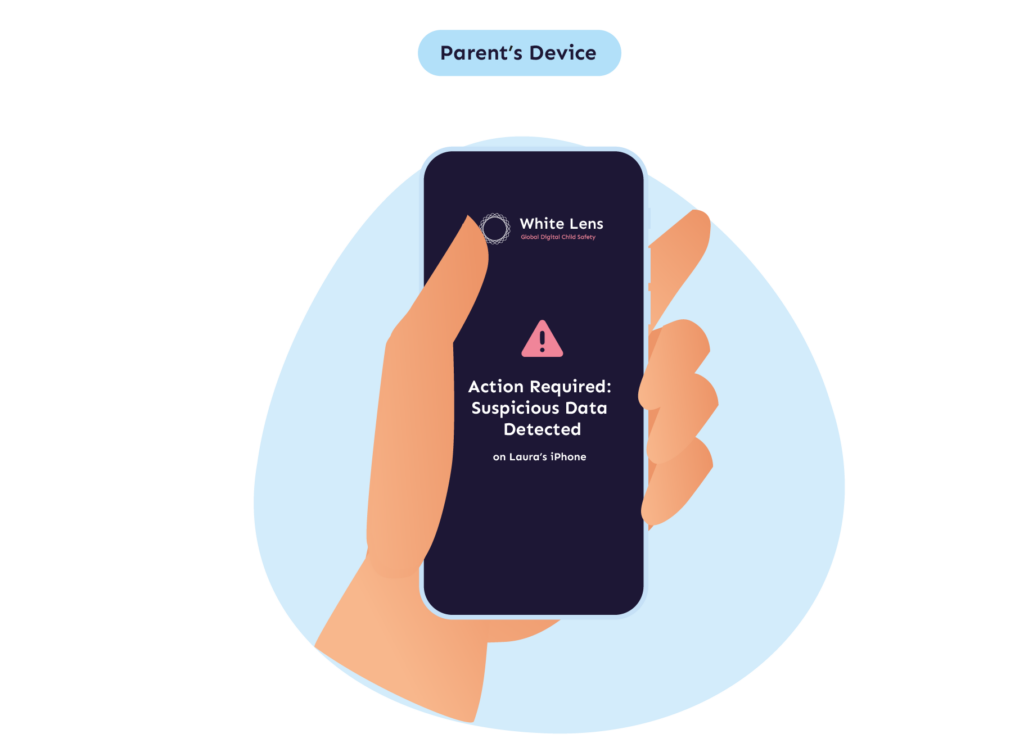

Step 4:

The app alerts parents to suspicious activity. Parents are notified only about identified threats, which respects the child’s privacy and independence.

Step 3:

The app blocks harmful content and notifies the child, explaining why it was blocked.

Step 2:

The app analyzes audio, video, images, and text, both sent and received, for harmful and illegal content, particularly content related to sexual abuse and pornography. This proactive analysis happens before the child can see or send the content, because we intercept data before it is encrypted.

Step 1:

Install our app on both the child’s and the parent’s digital devices, including smartphones, tablets, and laptops. Our app operates discreetly in the background, providing protection without disrupting the device’s functionality.

Sextortion

Sextortion involves using sexual images to coerce victims, often for financial gain. 84% of victims did not seek help because they were too embarrassed or ashamed. Unfortunately, sextortion greatly increases the risk of suicide.

CSAM

Child Sexual Abuse Material (CSAM), including AI-generated content, comprises images, videos, or texts depicting child abuse in all conceivable forms.

Sexting & Non-Consensual Intimate Imagery

Children’s constant access to digital devices has increased sexting and the non-consensual sharing of intimate images. Because the internet never forgets, victims are repeatedly confronted with the images and videos and relive the trauma again and again.

Grooming

Grooming involves building a relationship with a child to prepare them for abuse, manipulating trust and using fear and shame to maintain silence. Immediate action is vital to protect children from these deceptive practices, which have lasting psychological impacts.

Anouk Marniku